what if instead of "responsible disclosure" as the infosec standard it was "responsible resolution"?

As hacker summer camp swings into full gear, I reflect upon the time when I was arrested on suspicion of transforming a Hong Kong university mail server into a 0-day warez site and almost spent time in hacker winter camp. Fortunately, I was 13 at the time. Last week I turned 40, which means I've now been on the internet for 29 years...

You know that opening scene of Hackers where journalists are chasing a young kid around trying to get a media scoop? Yeah, that happened to me...

This scene in Hackers hits close to home: when I was a young child, reporters camped outside my family home in Hong Kong and ended up filing a story that listed my father's name and who his clients were. Not long afterwards, we left the country.

That moment had a profound impact on my life, but it wasn't until seven years later, when the Operation Buccaneer investigation became public and arrests were made, that I realised just how fucking dumb I had been.

An undercover operation began in October 2000. On December 11, 2001, law enforcement agents in six countries targeted 62 people suspected of violating software copyright, with leads in twenty other countries.

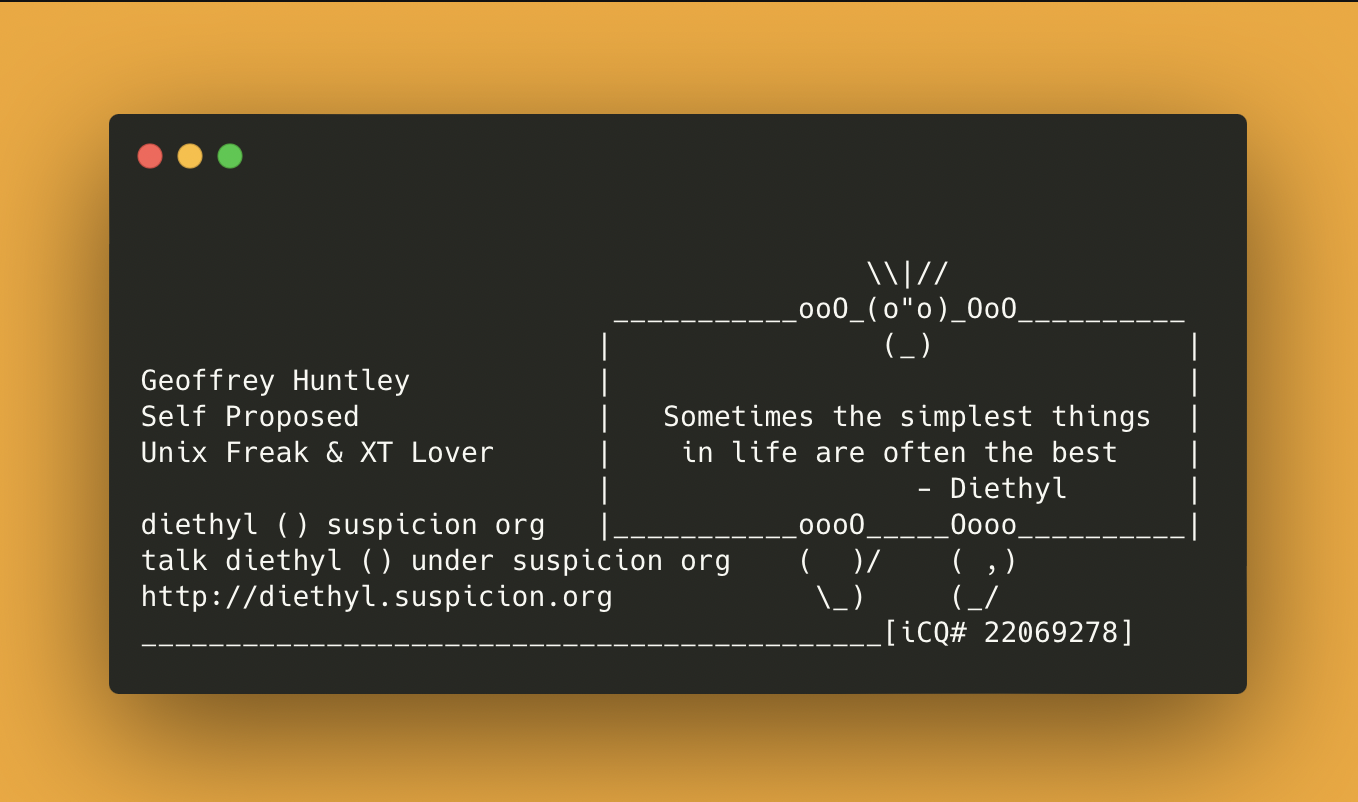

You see, Michael Kelly (aka "eRUPT") of DrinkOrDie, AMNESiA, CORP and RiSC, who ran the botnets for those warez groups, taught me how to program.

Michael, I wouldn't have become a software developer without your help, but I'm glad our relationship never extended past you answering my questions about the Tcl programming language and teaching me how the software 👇 that likely provided encrypted communications for the groups worked.

Wherever you are now, thanks, I guess? Hope all is well. I'm not even sure you knew I was 13, or if you even remember me. I'm 40 now, and it has been over 24 years since I was involved in any form of internet shenanigans. To be clear, I had no affiliation with the previously mentioned groups, but at 13 I did turn that mail server (which had oh so much disk space) into a 0-day site.

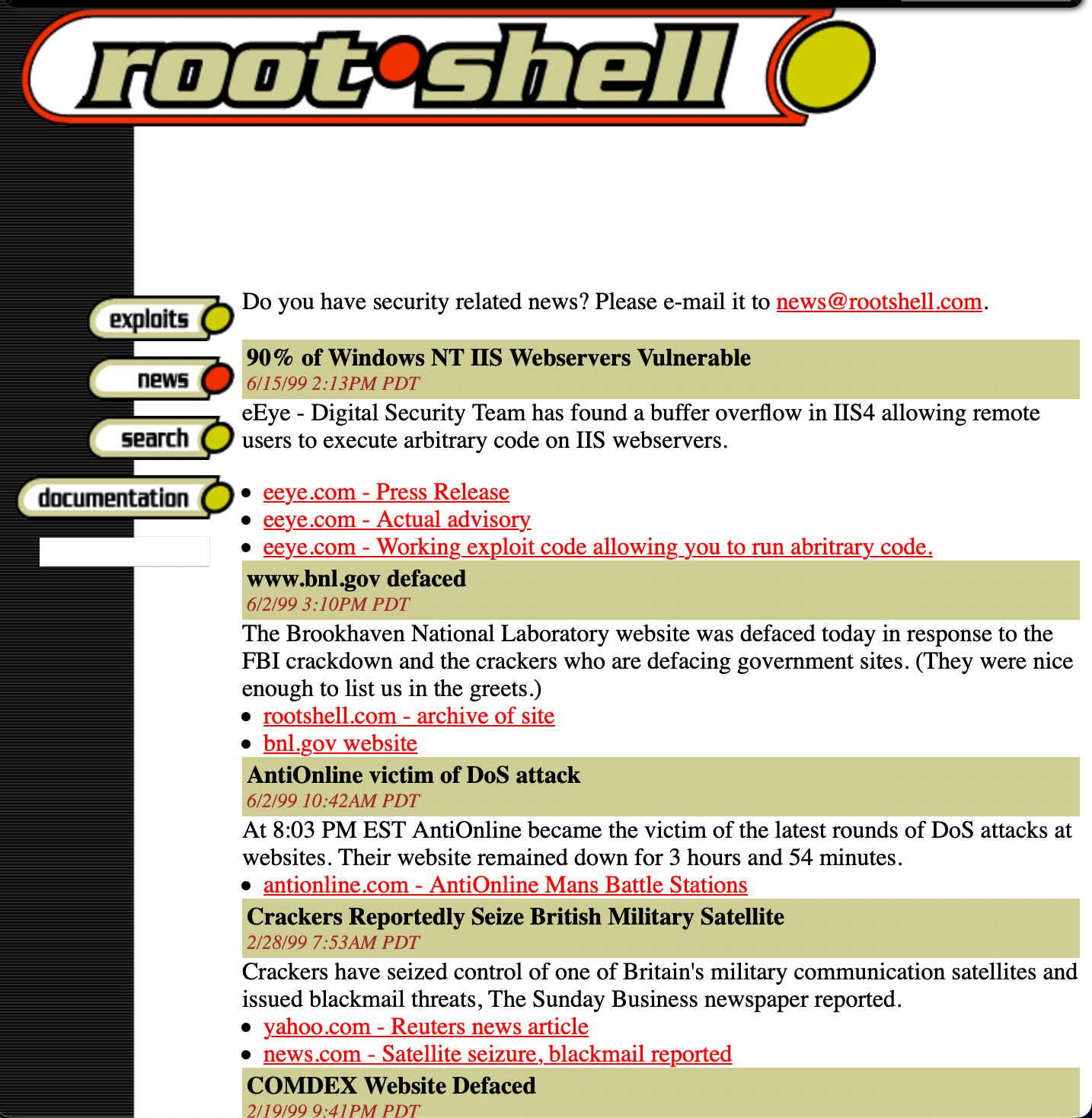

Back in 1995 the internet was different. It really was the wild west, and tbh you would be hard-pressed to find anyone currently in a senior technical leadership role who doesn't have a story similar to the one above.

# "hacking like it's 1995"

```
echo "+ +" >~/.rhosts
```

Back then infosex knowledge was, typically, sourced through IRC and the following three publications:

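Motivations were about exploration, sharing knowledge and fucking with people for fun. My favourite exploit of all time remains the 2B2B2B41544829 attack, where people such as myself would configure eggdrops to automatically issue the following commands, which caused people's modems to disconnect from the internet when they joined a community chat server. It worked because the victim's machine echoed the payload straight back out (in a CTCP PING reply or an ICMP echo reply), and modems that didn't enforce the Hayes guard time treated "+++" as an escape into command mode and "ATH0" as an instruction to hang up.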

```
# if you didn't know their IP address
/ctcp #windows PING +++ATH0

# if you knew their IP address
ping -c 5 -p 2B2B2B41544829 <target IP address>
```
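The `-p` pattern is simply the modem command's ASCII bytes written as hex (iputils `ping` documents a limit of 16 such pad bytes). The minimal Python sketch below, with hypothetical helper names, does that encoding in both directions. It also shows that `+++ATH0` encodes to `2B2B2B41544830`, whereas the final byte `0x29` in the pattern quoted above decodes to `)`.

```python
def to_ping_pattern(payload: str) -> str:
    """Encode an ASCII payload as the hex pad pattern that `ping -p` expects."""
    data = payload.encode("ascii")
    # iputils ping documents a maximum of 16 pad bytes for -p.
    if len(data) > 16:
        raise ValueError("ping -p accepts at most 16 pad bytes")
    return data.hex().upper()


def from_ping_pattern(pattern: str) -> str:
    """Decode a `ping -p` hex pattern back into readable ASCII."""
    return bytes.fromhex(pattern).decode("ascii")


if __name__ == "__main__":
    print(to_ping_pattern("+++ATH0"))           # 2B2B2B41544830
    print(from_ping_pattern("2B2B2B41544829"))  # +++ATH)
```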

2B2B2B41544829 was a crude way of filtering the community, ensuring that only technical people who knew how to configure an eggdrop instance or IRC bouncer, or who had an ISDN or OC3 connection, could participate. In the places I hung out as a young kid, we used it to combat the Eternal September phenomenon:

If there were technical folks we wanted to evict from the community, then reflection-based attacks such as Smurf and Fraggle were used instead, until they deeply understood that they were no longer welcome.

The internet and the information security sector have changed (and matured) so much since then, but two things remain true:

# hacking should not be a crime...

What most people think hacking is versus what hacking really is. pic.twitter.com/CB8bKJ8h5i

— Hacking is NOT a Crime (@hacknotcrime) August 4, 2020

# researchers don't owe you shit

Again and again, I see the phrase "being responsible" cargo-culted in our industry when it comes to disclosing security research, but I think the term is bullshit because it absolves the company that actually has the problem of any responsibility.

Something I've been pondering for a long time: what if, instead of "responsible disclosure", the industry standard was "responsible resolution"?

That one small flip shifts the responsibility for resolving the problem from the researcher to the company. If the company does not resolve the problem within a community-accepted timeframe, then it is deemed irresponsible. Over time, companies deemed irresponsible would face higher and higher cyber insurance premiums and would thus be incentivised to actually fix their shit.
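To make the flip concrete, here is a minimal Python sketch of how a report might be judged under "responsible resolution". It is an illustration, not an existing standard: the 90-day window is an assumption standing in for the "community accepted timeframe" (it mirrors the disclosure deadline popularised by Google Project Zero), and the function and status names are hypothetical. Note that nothing in it depends on the researcher's behaviour; only the company's fix date matters.

```python
from datetime import date, timedelta
from typing import Optional

# Assumption: 90 days stands in for the "community accepted timeframe".
DEFAULT_WINDOW = timedelta(days=90)


def resolution_status(
    reported: date,
    resolved: Optional[date],
    today: date,
    window: timedelta = DEFAULT_WINDOW,
) -> str:
    """Classify a report under a hypothetical "responsible resolution" rule.

    The clock starts when the report arrives, and only the company's fix
    (or lack of one) decides the outcome.
    """
    deadline = reported + window
    if resolved is not None:
        return "responsible" if resolved <= deadline else "resolved late"
    return "pending" if today <= deadline else "irresponsible"


if __name__ == "__main__":
    reported = date(2022, 8, 11)
    print(resolution_status(reported, date(2022, 9, 1), date(2022, 9, 1)))  # responsible
    print(resolution_status(reported, None, date(2022, 12, 1)))  # irresponsible
```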

Time and again, I see companies trying to funnel security researchers into a process with non-disclosure agreements that involves jumping through numerous hoops, all whilst forgetting that researchers don't owe you shit.

"Why would I ever sign an NDA for the privilege of telling you what’s wrong with you?" @k8em0

— Dennis (@DennisF) August 11, 2022

If you want to keep the ABBA-singing haxors at bay, the #1 thing any responsible company can do is serve them on a gold fucking platter.

Yeah well it's #infosex hacker time to ABBA pic.twitter.com/U1XkQnyAZ0

— John Jackson (@johnjhacking) October 5, 2021

That means having the following patterns configured. If you don't have them, then sorry, your company is not responsible, and you don't have a leg to stand on until you do.

## website.com/.well-known/security.txt
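For reference, RFC 9116 defines this file's format: one or more `Contact` fields and exactly one `Expires` field are required, while fields such as `Policy`, `Acknowledgments`, `Canonical` and `Preferred-Languages` are optional. The Python sketch below renders such a file. The `example.com` values are placeholders, the function name is hypothetical, and pointing `Policy` and `Acknowledgments` at `/security/policy` and `/security/thanks` is an assumption that matches the URL conventions suggested further down this post.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def render_security_txt(
    contacts: list[str],
    expires: datetime,
    optional: Optional[dict[str, str]] = None,
) -> str:
    """Render a security.txt body containing the RFC 9116 required fields."""
    if not contacts:
        raise ValueError("RFC 9116 requires at least one Contact field")
    if expires.tzinfo is None:
        raise ValueError("Expires must be timezone-aware")
    lines = [f"Contact: {uri}" for uri in contacts]
    # Expires is an RFC 3339 timestamp; normalise it to UTC ("Z").
    stamp = expires.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    lines.append(f"Expires: {stamp}")
    for field, value in (optional or {}).items():
        lines.append(f"{field}: {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # RFC 9116 recommends an Expires value less than a year in the future.
    expires = datetime.now(timezone.utc) + timedelta(days=180)
    print(render_security_txt(
        contacts=[
            "mailto:security@example.com",
            "https://example.com/security/report",
        ],
        expires=expires,
        optional={
            "Policy": "https://example.com/security/policy",
            "Acknowledgments": "https://example.com/security/thanks",
            "Preferred-Languages": "en",
            "Canonical": "https://example.com/.well-known/security.txt",
        },
    ))
```

Serve the output at `/.well-known/security.txt` and regenerate it before the `Expires` date passes, otherwise the file is considered stale.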

## DNS Security TXT
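DNS Security TXT is a proposal to publish the same contact details as TXT records on the domain itself, using `security_contact=` and `security_policy=` keys. The sketch below assumes you already have the record strings (for example from `dig +short TXT example.com`, which prints each record wrapped in double quotes) and simply picks out those two keys. The function name is hypothetical, and records split into multiple quoted strings are not handled.

```python
def parse_dns_security_txt(records: list[str]) -> dict[str, list[str]]:
    """Extract DNS Security TXT keys from raw TXT record strings.

    Assumes each record is a single quoted string, as printed by
    `dig +short TXT example.com`; multi-string records are not joined.
    """
    found: dict[str, list[str]] = {"security_contact": [], "security_policy": []}
    for record in records:
        value = record.strip().strip('"')
        # Split on the first "=" only, so URLs with query strings survive.
        key, sep, rest = value.partition("=")
        if sep and key in found:
            found[key].append(rest)
    return found


if __name__ == "__main__":
    sample = [
        '"v=spf1 -all"',  # unrelated TXT records are ignored
        '"security_contact=mailto:security@example.com"',
        '"security_policy=https://example.com/security/policy"',
    ]
    print(parse_dns_security_txt(sample))
```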

## website.com/security

Your company website should gather everything related to security in a single place, and the page should be designed for two personas: the CISO (customer) and "I've found a security issue and need to contact someone" (white-hat hacker).

I suggest using /security as the URL because it's guessable.

## website.com/security/report

A place where people can submit reports. It can be a redirect to the email address listed in your security.txt, or to a bug bounty platform if you decide to head down that route (but remember: security researchers don't owe you shit, and senior researchers will often just spill the tea to the press at the sight of a bounty platform).

## website.com/security/policy

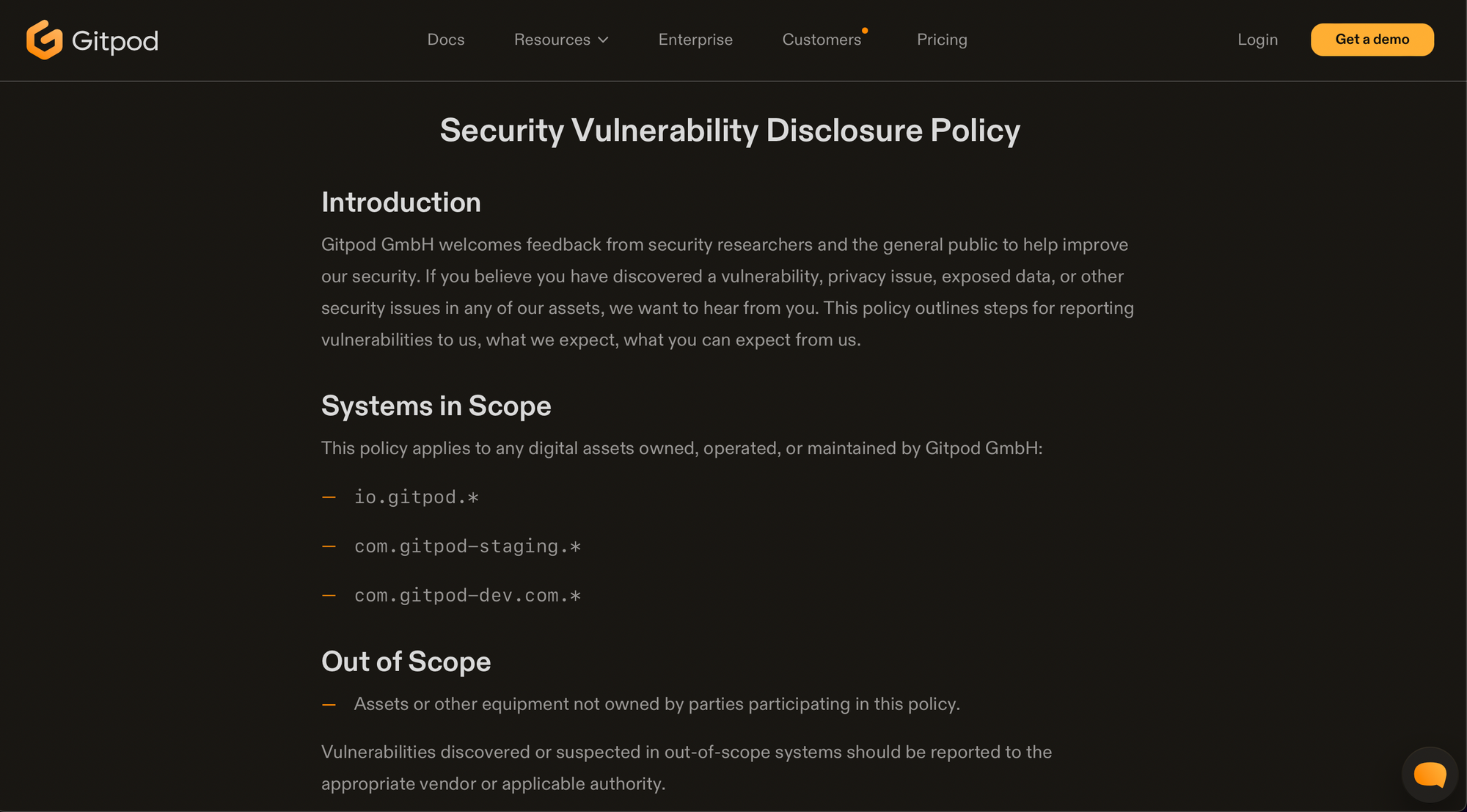

If you don't have a policy then start the journey at

Please customise the above as needed. At Gitpod, I added a paragraph nudging security researchers to do their research on the self-hosted edition of Gitpod (because it is the same codebase as the hosted Gitpod service) and clarified which systems are in scope.

I suggest using /security/policy as the URL because it's guessable and builds on the /security convention.

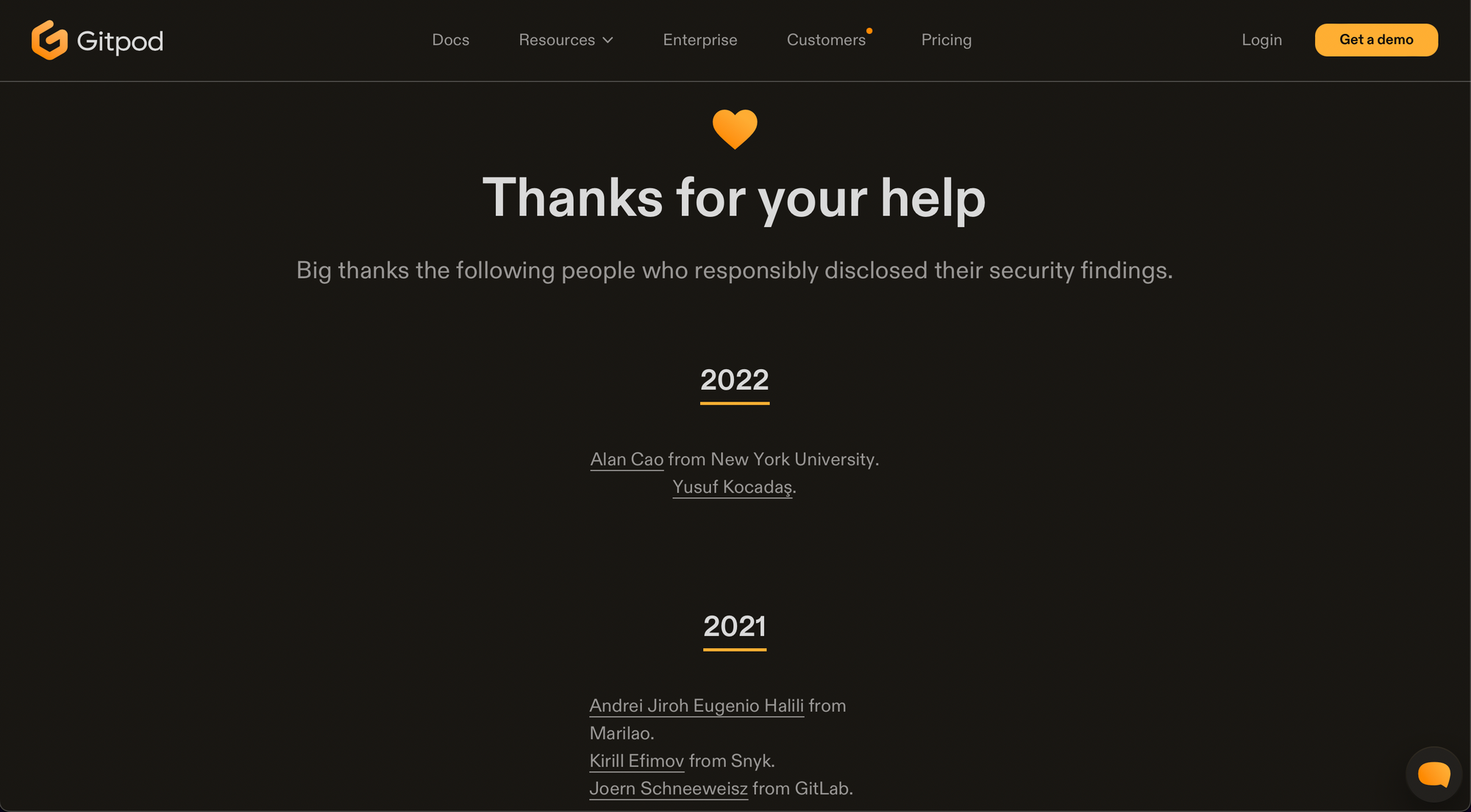

## website.com/security/thanks

Whitehat security researchers start at the bottom, typically running Nessus scanners against infrastructure within "an agreed scope of machines" (lol). The way to escape the hell that is authoring pentest reports is by collecting citations that researchers can put on their resume, acknowledging that they did indeed find a security issue and that they are in good standing with the company.

By offering a wall of fame, you make it easier for researchers to stay in good standing, you become a company that security researchers want to work at, and you offer a service that helps juniors rise through the ranks of the infosec industry.

I suggest using /security/thanks as the URL because it's guessable and builds on the /security convention.
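Taken together, the conventions above form a checklist that is easy to automate. The minimal Python sketch below lists the guessable URLs and reports which ones are missing. It assumes a hypothetical `exists` callback that you would back with a real HTTP client (for example, treating any 2xx or 3xx response as present); a set lookup stands in for it here so the example runs offline.

```python
from typing import Callable

# The guessable paths suggested in this post, plus the RFC 9116 location.
SECURITY_PATHS = (
    "/.well-known/security.txt",
    "/security",
    "/security/report",
    "/security/policy",
    "/security/thanks",
)


def missing_security_pages(base_url: str, exists: Callable[[str], bool]) -> list[str]:
    """Return every security URL under base_url for which exists() is False."""
    base = base_url.rstrip("/")
    return [base + path for path in SECURITY_PATHS if not exists(base + path)]


if __name__ == "__main__":
    published = {
        "https://example.com/.well-known/security.txt",
        "https://example.com/security",
    }
    # Offline stand-in for an HTTP check: a URL "exists" if it is in the set.
    print(missing_security_pages("https://example.com/", published.__contains__))
```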

## security@example.com

This one is simple, but I see it get fucked up again and again. The security@ email address should be staffed and triaged by engineers. It should NOT go to physical building security; use something like facilities@example.com for that. 99.99% of media incidents and PR crises caused by researchers being unable to contact the company could have been averted with this advice alone.

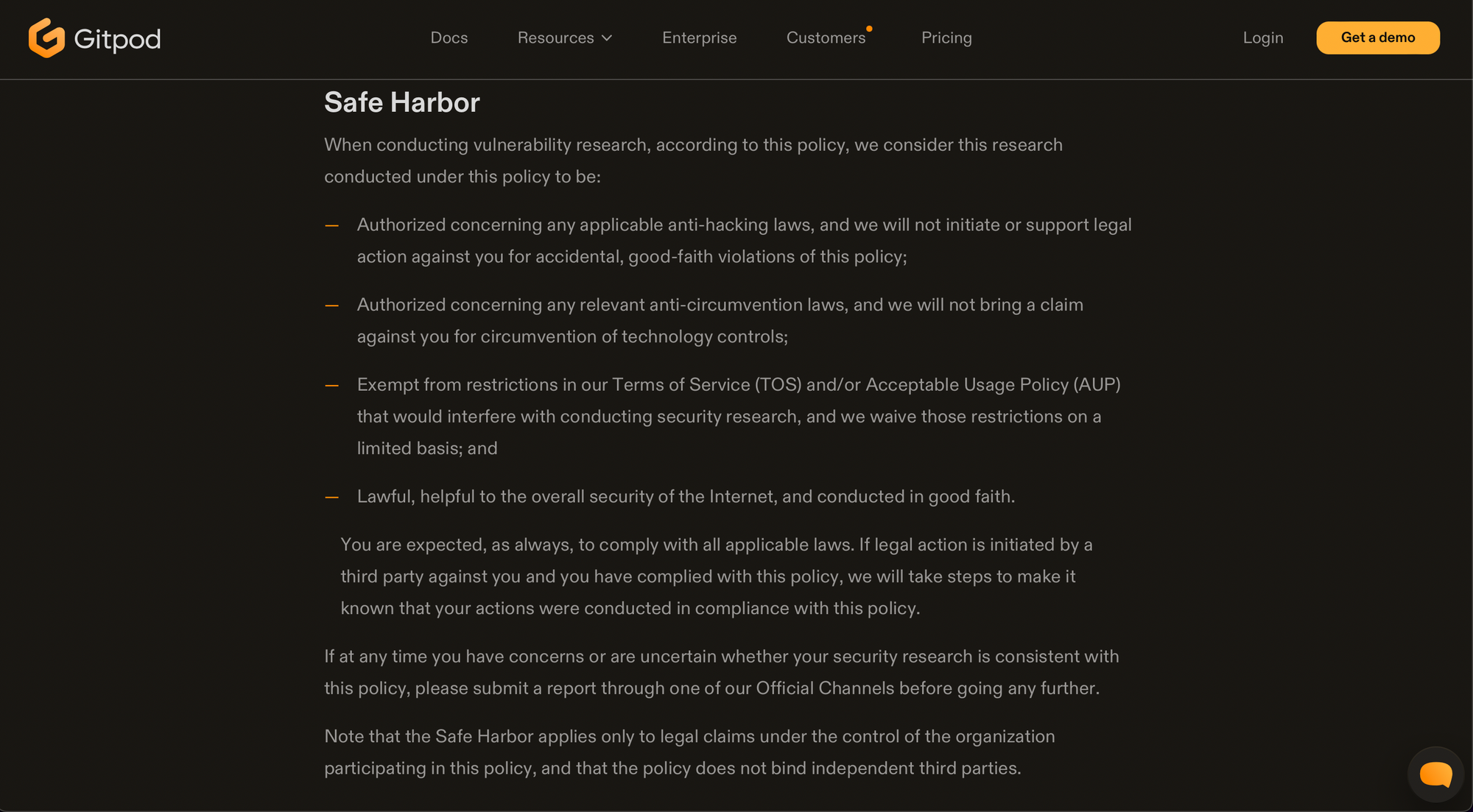

## safe harbor provisions

The law is murky when it comes to security research and responsible disclosure, but by shipping a safe harbor provision in your security policy (/security/policy), you will help security researchers caught in the gulf between legality and disclosure.

If you are unfamiliar with the concept, then take a look below.

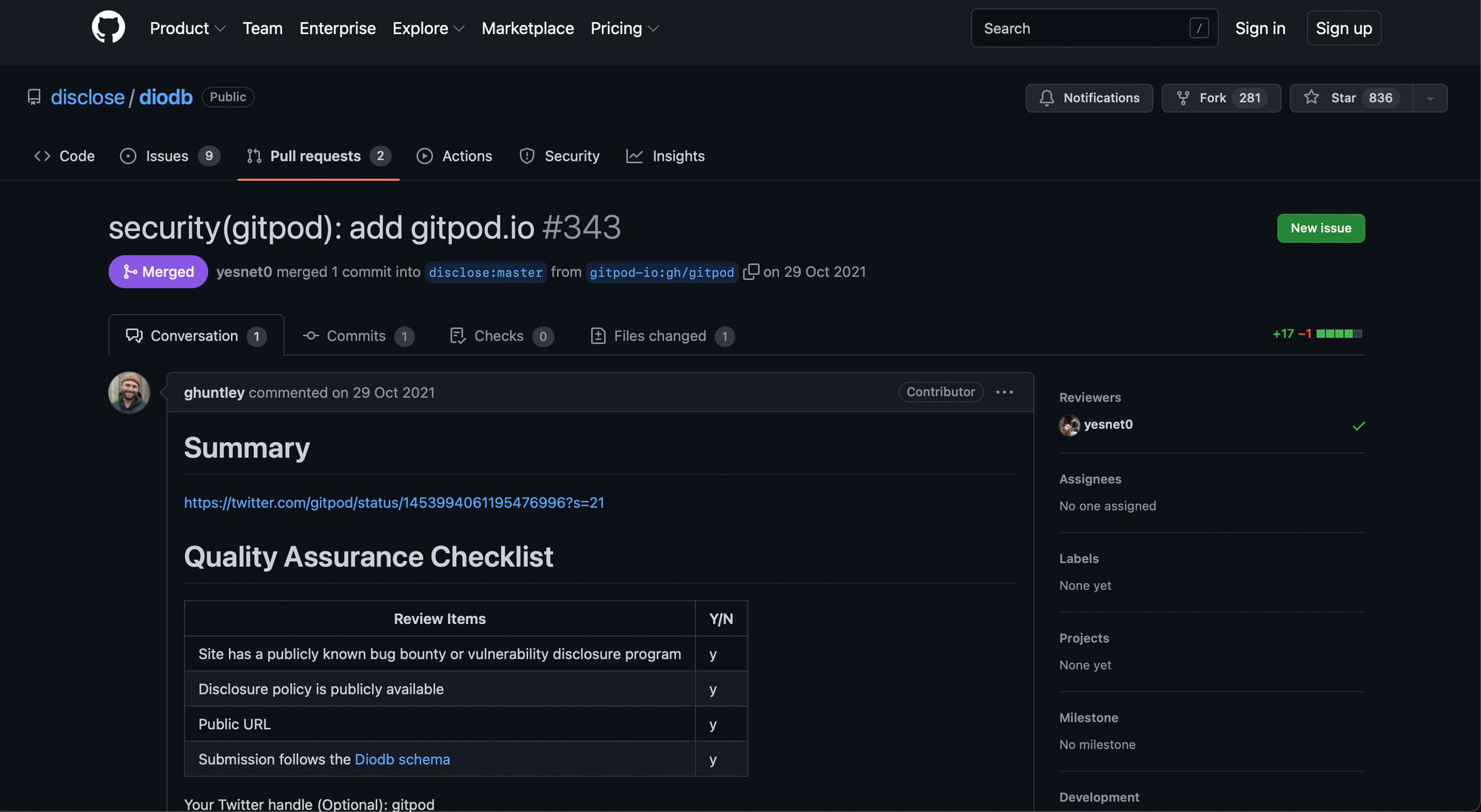

## publish your company in the disclose.io database (diodb)

Did you know there is a database that security appliances, whitehats and professionals turn to when they discover something that just isn't right? It's open source, and your company should be in it!

Welcome to the #diodb @adahealth @gitpod @definitynetwork @84codes @Optionsit @recidiviz @BritishGas @ethereum @antavo @GovCERT_CH!

— disclose.io (@disclose_io) October 29, 2021

...and shoutout to @GeoffreyHuntley @nikitastupin @ChaseJ @springmoon6 @yabeow @meszicsaba @caseyjohnellis @sickcodes for the PRs <3 https://t.co/ifvS3bRj9q

## be accessible and be a desirable place to work for

Send your employees to hacker summer camp and encourage them to attend those random-ass vigils for cockroaches. By being actively known and accessible in the community (and it is incredibly small), you can mitigate many public relations issues. It also doubles as a way to build out a talent pipeline you can hire from for your current (or future) security program...

@TrevorTheRoach memorial at @derbycon. #TrevorForget 2018. RIP Trevor you brave little roach. pic.twitter.com/nUBDmnmPmF

— ʝօʄʄ ȶɦʏɛʀ 🇦🇺🇺🇸 ʟօʋɛ, ʀɛֆքɛƈȶ, ӄռօաʟɛɖɢɛ (@joff_thyer) October 6, 2018

🙇♂️ thanks for reading...

As hacker summer camp swings into full gear, I reflect upon the time where I was arrested under suspicion of transforming a Hong Kong university mail server into a 0-day warez site and almost spent time in hacker winter camp...https://t.co/5D9E23xRxx

— GEOFF 🦩🎼 (@GeoffreyHuntley) August 11, 2022