I dream about AI subagents; they whisper to me while I'm asleep

In a previous post, I wrote about "real context window" sizes versus "advertised context window" sizes.

Claude 3.7’s advertised context window is 200k tokens, but I've noticed that the quality of output clips at the 147k-152k mark. Regardless of which agent is used, when clipping occurs, tool-call-to-tool-call invocation starts to fail.

The short version is that we are in another era of "640kb should be enough for anyone," and folks need to start thinking about how the current generation of context windows is similar to RAM on a computer in the 1980s until such time that DOS=HIGH,UMB becomes a thing...

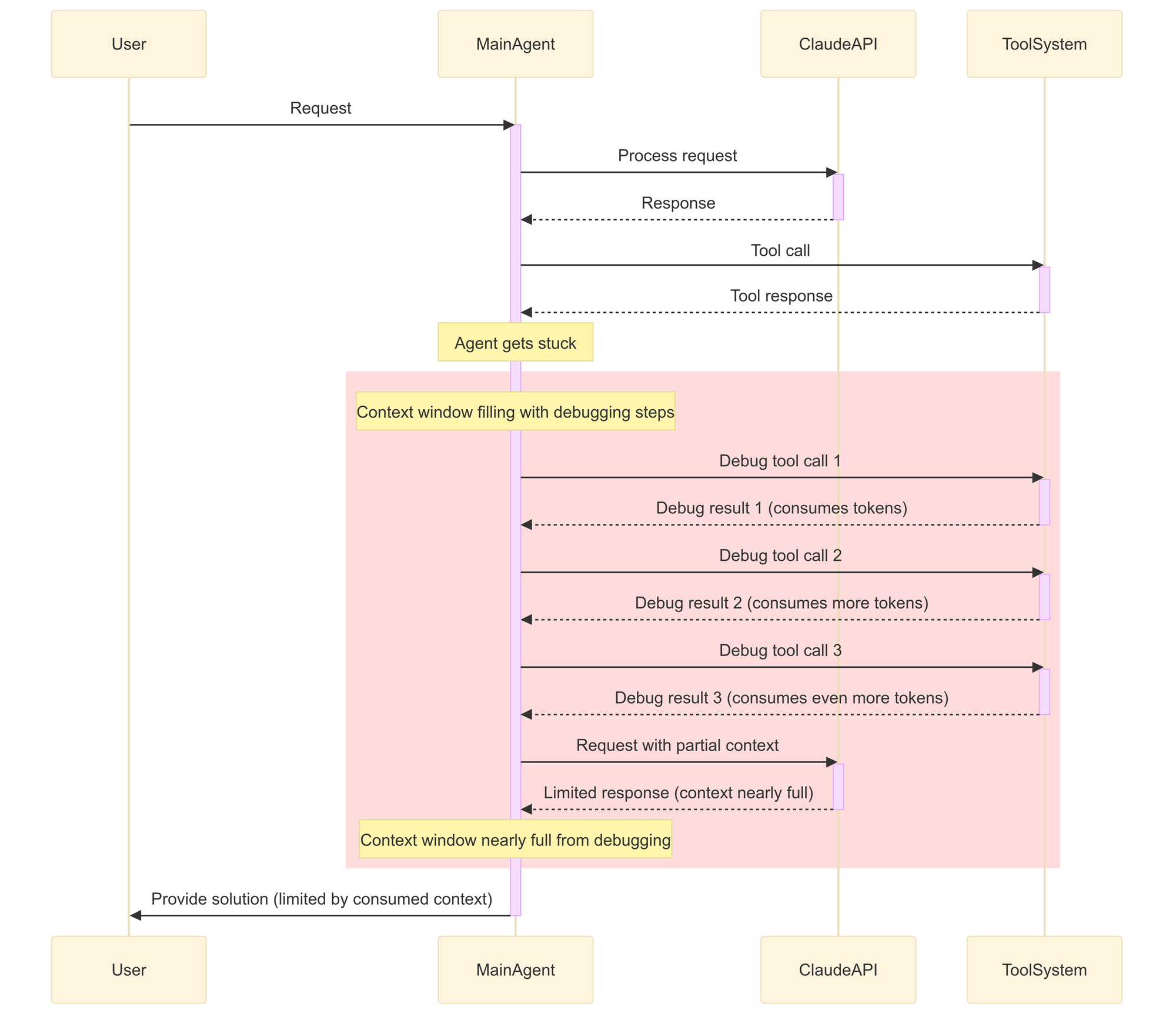

The current generation of coding agents works via a tight evaluation loop of tool call to tool call, all operating within a single context window (i.e. RAM). The problem with this design is that when an LLM produces a bad outcome, the coding assistant/agent enters a death spiral and brute-forces on the main context window, consuming precious resources as it tries to figure out the next steps.

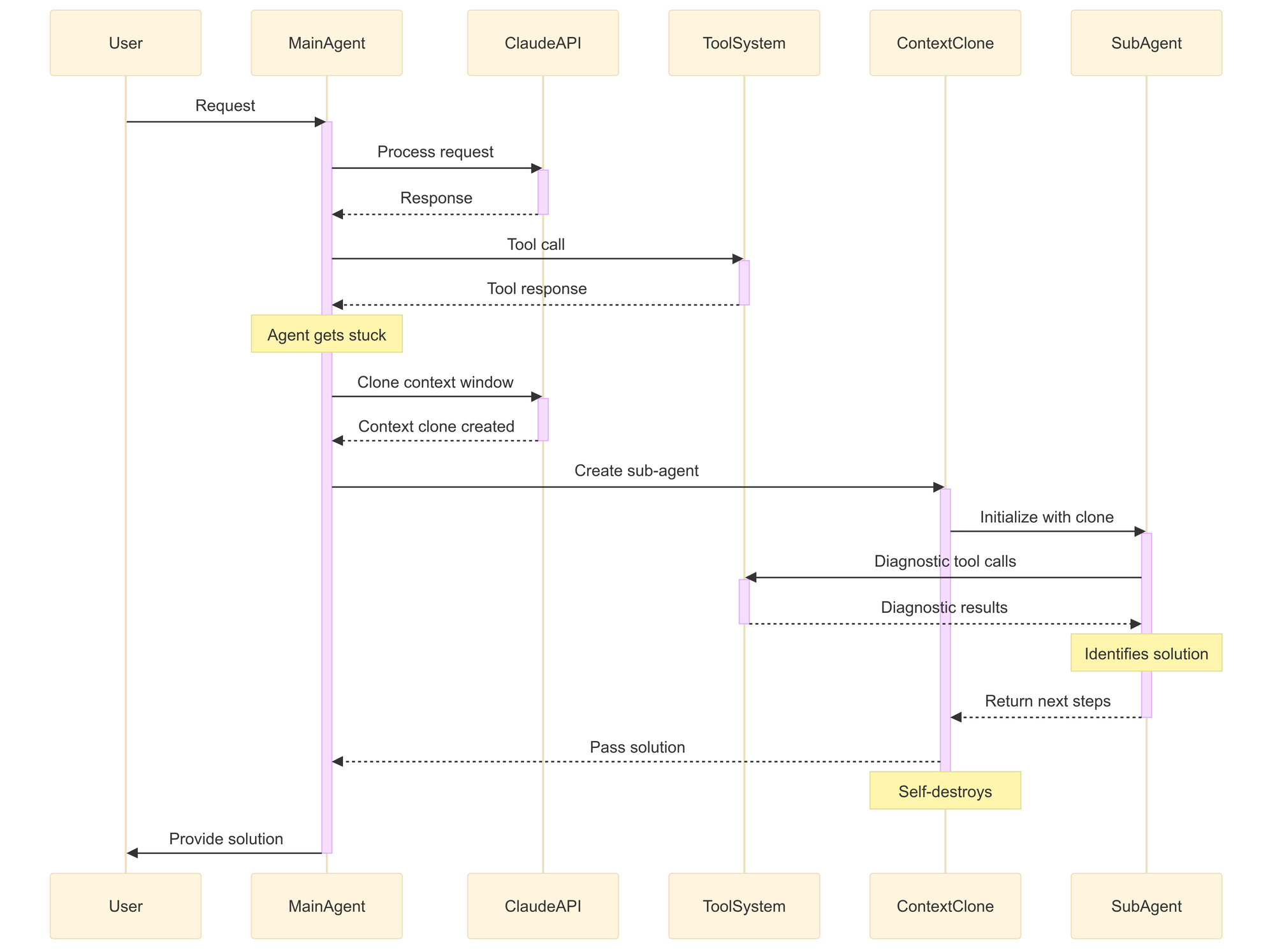
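To make the death spiral concrete, here is a minimal sketch of a single-context loop. Everything in it is an assumption for illustration: `run_agent_loop`, `call_tool`, and the token numbers are hypothetical and not taken from any real agent. The point is only that every failed attempt stays in the one shared window.

```python
from typing import Callable, List


def run_agent_loop(
    call_tool: Callable[[int], str],
    context_limit: int,
    cost_per_call: int,
) -> List[str]:
    """Call a tool until it succeeds or the single shared context is full.

    All names and numbers here are hypothetical, for illustration only.
    """
    context: List[str] = []
    used = 0
    attempt = 0
    while used + cost_per_call <= context_limit:
        attempt += 1
        result = call_tool(attempt)
        context.append(result)  # good or bad, the result stays in the window
        used += cost_per_call
        if result == "ok":
            break
    return context


def always_fails(attempt: int) -> str:
    # A tool that never succeeds: the loop brute-forces until context runs out.
    return f"attempt {attempt}: error"


if __name__ == "__main__":
    history = run_agent_loop(always_fails, context_limit=10, cost_per_call=3)
    print(history)
```

With a tool that never succeeds, the loop only stops once the window is spent, and the window is then full of failures rather than useful work.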

However, I've been thinking: What if an agent could spawn a new agent and clone the context window? If such a thing were possible, it would enable an agent to spawn a sub-agent. The main agent would pause, wait for the sub-agent to burn through its own context window (i.e. SWAP), and then receive concrete next steps from it.
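Here is a rough sketch of what that could look like, under loud assumptions: the `Agent` class, its `budget` of context entries, and the `delegate` method are all hypothetical names invented for this illustration, and no real agent framework is implied. The sub-agent gets a copy of the context, burns through its own copy, and only a one-line summary lands back in the main agent's window.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Agent:
    """Hypothetical agent whose context window is a bounded list of entries."""

    name: str
    context: List[str] = field(default_factory=list)
    budget: int = 8  # assumed maximum number of context entries ("RAM")

    def remember(self, entry: str) -> None:
        if len(self.context) >= self.budget:
            raise MemoryError(f"{self.name}: context window exhausted")
        self.context.append(entry)

    def spawn_subagent(self) -> "Agent":
        # Clone the context so the sub-agent starts with the same knowledge
        # but writes to its own copy ("SWAP"), not to the main window.
        return Agent(f"{self.name}/sub", list(self.context), self.budget)

    def delegate(self, task: Callable[["Agent"], str]) -> str:
        sub = self.spawn_subagent()
        summary = task(sub)      # the main agent is paused while this runs
        self.remember(summary)   # only the conclusion costs main context
        return summary


def noisy_debugging(agent: Agent) -> str:
    # Illustrative task: several failed attempts, then one concrete next step.
    for attempt in range(1, 4):
        agent.remember(f"attempt {attempt}: tests failed")
    return "next step: rerun the tests after fixing the failing import"


if __name__ == "__main__":
    main = Agent("main", context=["task: fix the build"])
    main.delegate(noisy_debugging)
    print(main.context)
```

The design choice worth noticing is that the failed attempts live and die in the sub-agent's copy, so the main thread pays for one entry instead of four.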

It's theoretical right now, and I haven't looked into it. Still, I dream of the possibility that in the future, software development agents will not waste precious context (RAM) and enter a death spiral on the main thread.

p.s. socials

- LinkedIn: https://www.linkedin.com/posts/geoffreyhuntley_i-dream-about-ai-subagents-they-whisper-activity-7316994087725813762-YuJX

- Twitter: https://x.com/GeoffreyHuntley/status/1911237235644809466

- BlueSky: https://bsky.app/profile/ghuntley.com/post/3lmnv43hmk32d

p.p.s. extra reading

"You see this [breakdown] a lot even in non-coding agentic systems where a single agent just starts to break down at some point." - Shrivu Shankar