A Model Context Protocol (MCP) Server for Microsoft Paint

Why did I do this? I have no idea, honest, but it now exists. It has been over 10 years since I last had to use the Win32 API, and part of me was slightly curious about how the Win32 interop works with Rust.

Anywhoooo, below you'll find the primitives that can be used to connect Microsoft Paint to Cursor or Claude Desktop and draw in Microsoft Paint. Here's the source code.

I'm not saying it's quality or in any way feature-complete; this is about as low-effort as it gets, as it's not a serious project. If you want to take ownership of it and turn it into a 100% complete meme, get in touch.

It was created using my /stdlib + /specs technical patterns to drive the LLM towards successful outcomes (aka "vibe coding").

/stdlib

/specs

If you have read the above posts (thanks!), hopefully, you now understand that LLM outcomes can be programmed. Thus, any issue in the code above could have been solved through additional programming or better prompting during the stdlib+specs phase and by driving an evaluation loop.

show me

how does this work under the hood?

To answer that, I must first explain what the Model Context Protocol is about. Everyone seems to be buzzing about it at the moment, with folks declaring it "the last API you will ever write" (which curmudgeons such as myself have heard N times before) or the "USB-C of APIs", but as a developer tooling engineer, I find that none of those explanations hits home.

To MCP or not to MCP, that's the question. Lmk in comments

— Sundar Pichai (@sundarpichai) March 30, 2025

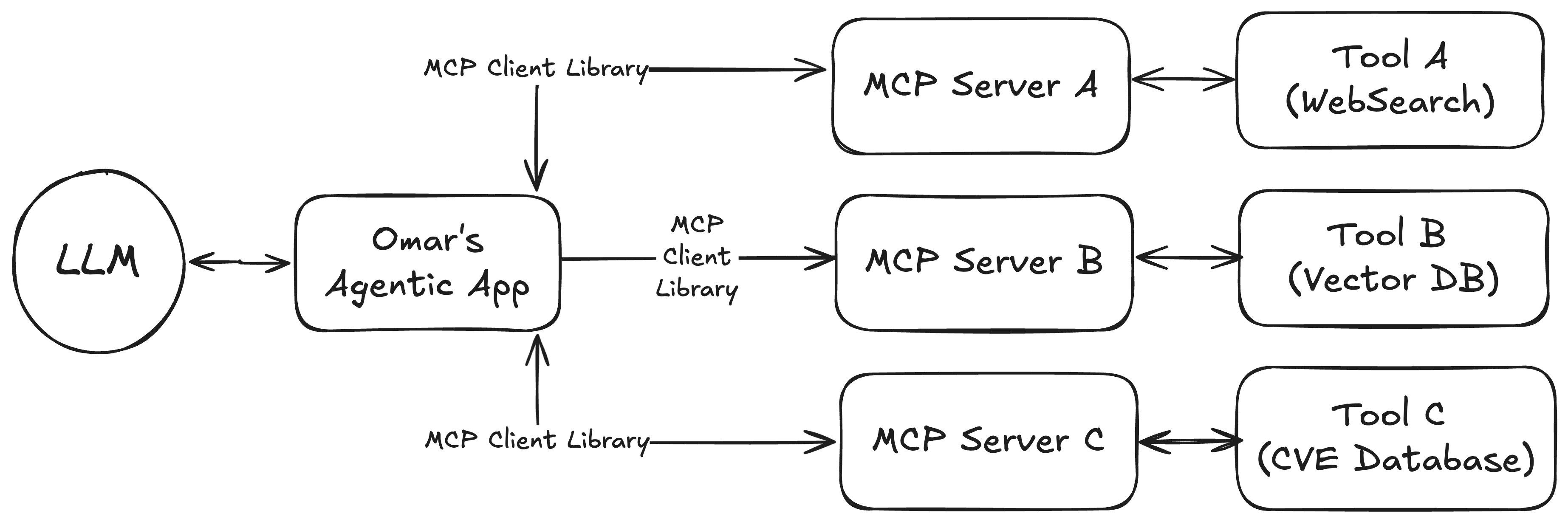

First and foremost, MCP is a specification that describes how LLMs can make remote procedure calls (RPC) to tools external to the LLM itself.
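To make "RPC to external tools" concrete, here is a minimal sketch of the JSON-RPC 2.0 message pair for MCP's `tools/call` method, following the shape described in the 2025-03-26 specification. The `draw_line` tool, its `x1`/`y1`/`x2`/`y2` arguments, and the `make_tool_call`/`handle_request` helpers are hypothetical illustrations, not the Paint server's actual API; a real server would drive Win32 calls instead of returning a string.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry: tool name -> function taking the arguments dict.
ToolFn = Callable[[Dict[str, Any]], str]


def draw_line(args: Dict[str, Any]) -> str:
    # Hypothetical tool: a real Paint server would issue Win32 input events here.
    return f"drew line from ({args['x1']}, {args['y1']}) to ({args['x2']}, {args['y2']})"


TOOLS: Dict[str, ToolFn] = {"draw_line": draw_line}


def make_tool_call(request_id: int, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build the JSON-RPC 2.0 request an MCP client sends to invoke a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def handle_request(request: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch a tools/call request to the registry and wrap the result."""
    if request.get("method") != "tools/call":
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    params = request["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        # Unknown tools are reported as an "Invalid params" protocol error.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    text = tool(params.get("arguments", {}))
    # A tool result carries a list of content items plus an isError flag.
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}


if __name__ == "__main__":
    req = make_tool_call(1, "draw_line", {"x1": 10, "y1": 10, "x2": 200, "y2": 150})
    print(json.dumps(req))
    print(json.dumps(handle_request(req)))
```

The point is that, from the server's side, a "tool" is just a named function plus a dispatch table; the protocol only standardizes the envelope around it.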

There are a couple of different transports (JSON-RPC over stdio and JSON-RPC over HTTPS), but the specification is evolving rapidly, so they're not worth covering in detail here. Refer to https://spec.modelcontextprotocol.io/specification/2025-03-26/ for the latest specification, and to the article below to understand what this all means from a security perspective...
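For a feel of the stdio transport, here is a minimal sketch of a server loop. The client launches the server as a subprocess and the two exchange newline-delimited JSON-RPC messages over stdin/stdout; the 2025-03-26 specification says a message must not contain embedded newlines. The `serve` and `handle` functions are assumptions for illustration, the handler only understands MCP's `ping` utility, and the `initialize` handshake a real server must implement is omitted.

```python
import io
import json
from typing import Any, Callable, Dict, Optional, TextIO

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


def handle(message: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical handler that only understands MCP's `ping` utility method."""
    if message.get("method") == "ping":
        return {"jsonrpc": "2.0", "id": message["id"], "result": {}}
    return {"jsonrpc": "2.0", "id": message["id"],
            "error": {"code": -32601, "message": "Method not found"}}


def serve(stdin: TextIO, stdout: TextIO, handler: Handler) -> None:
    """Read one JSON-RPC message per line and write one response per line."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue
        try:
            message = json.loads(line)
        except json.JSONDecodeError:
            response: Optional[Dict[str, Any]] = {
                "jsonrpc": "2.0", "id": None,
                "error": {"code": -32700, "message": "Parse error"}}
        else:
            # Notifications carry no "id" and get no response; this sketch
            # also ignores batches and other non-object payloads.
            is_request = isinstance(message, dict) and "id" in message
            response = handler(message) if is_request else None
        if response is not None:
            # json.dumps without indent emits a single line, as stdio framing requires.
            stdout.write(json.dumps(response) + "\n")
            stdout.flush()


if __name__ == "__main__":
    # Simulate a client session with in-memory streams instead of real stdin/stdout.
    fake_stdin = io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n'
                             '{"jsonrpc": "2.0", "method": "notifications/initialized"}\n')
    fake_stdout = io.StringIO()
    serve(fake_stdin, fake_stdout, handle)
    print(fake_stdout.getvalue(), end="")
```

In a real deployment you would pass `sys.stdin` and `sys.stdout` to `serve`, and anything that isn't a protocol message (debug logging, for instance) would have to go to stderr so it doesn't corrupt the stream.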

Instead, let's focus on the fundamentals that matter to engineers who seek to automate software authoring, namely tools and tool descriptions, because I suspect these foundational concepts will last forever.
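As a taste of what a tool description looks like on the wire, here is a hedged sketch. In the 2025-03-26 specification, each entry a server returns from `tools/list` carries a `name`, a human-readable `description`, and an `inputSchema` written in JSON Schema; the model leans on the description to decide when to call the tool and on the schema to shape its arguments. The `draw_rectangle` tool, its fields, and the `list_tools_response`/`missing_arguments` helpers are hypothetical, not taken from the Paint server's source.

```python
import json
from typing import Any, Dict, List

# Hypothetical tool description, in the shape an MCP server returns from tools/list.
DRAW_RECTANGLE: Dict[str, Any] = {
    "name": "draw_rectangle",
    "description": "Draw a rectangle on the Paint canvas. Coordinates are in canvas pixels.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "x": {"type": "integer", "description": "Left edge"},
            "y": {"type": "integer", "description": "Top edge"},
            "width": {"type": "integer", "minimum": 1},
            "height": {"type": "integer", "minimum": 1},
        },
        "required": ["x", "y", "width", "height"],
    },
}


def list_tools_response(request_id: int, tools: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Wrap tool descriptions in a JSON-RPC result for the tools/list method."""
    return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": tools}}


def missing_arguments(tool: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    """Return required fields absent from the arguments (a tiny subset of JSON Schema validation)."""
    return [field for field in tool["inputSchema"].get("required", []) if field not in arguments]


if __name__ == "__main__":
    print(json.dumps(list_tools_response(1, [DRAW_RECTANGLE]), indent=2))
    print(missing_arguments(DRAW_RECTANGLE, {"x": 0, "y": 0}))
```

Note how much of the "programming" lives in plain English inside `description`: that string is effectively a prompt, which is why tool descriptions deserve as much care as the code behind them.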