tricks from a marketers handbook: identifying enterprise buying intent

Back in 2004, I created a content delivery network and one of the world's first video blogs which peaked as the 1901st most visited website in the world and was interviewed by the Washington Post, and the BBC and was published in various newspapers at a ripe age of 20.

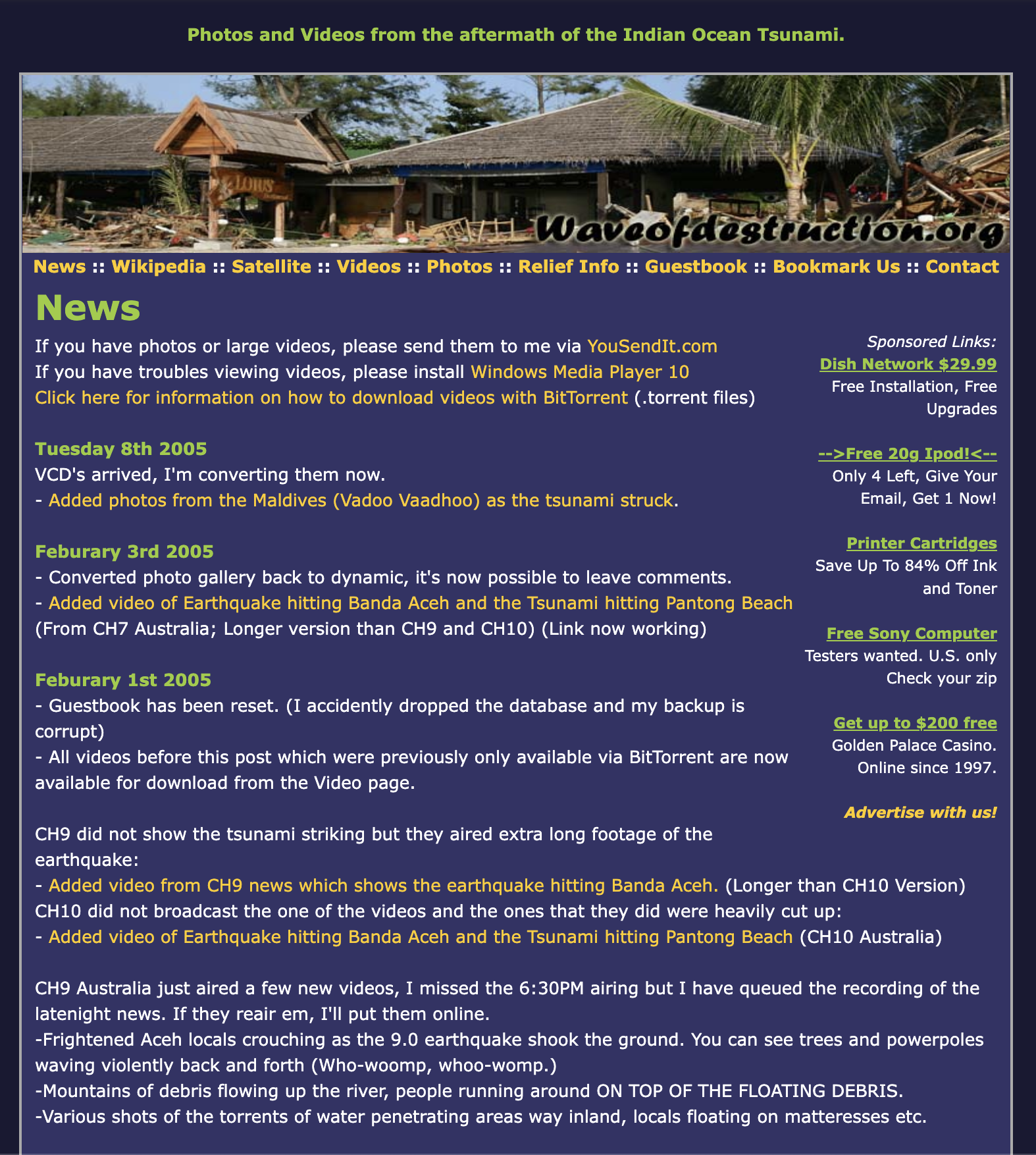

Waveofdestruction.org was created on December 28, 2004, to serve as a central location for all videos/photos related to the tsunami. Word spread quickly and much of the content was featured in newspapers and on network TV, current affairs and news programs worldwide.

The website was featured in a TED talk as an example of "when social media became the news". The 2004 Indian ocean earthquake and tsunami was a moment that demonstrated how the internet can surpass, or at least complement, traditional news media – even in terms of delivering multimedia content.

Today we accept social media as the defacto default as a primary source of information but before the tsunami, the media outlets were number 1. The year 2004 was a time before YouTube, Twitter, Instagram and social media in general but the exact same techniques and lessons from 18 years ago I still use today to grow something from nothing.

growth doesn't happen without deliberate design

Waveofdestruction.org had a simple start. When I launched the domain wasn't even registered. Videos and photos of the tsunami were appearing online and I was fortunate enough to have colo-space, a couple of spare bare metal machines (Pentium 3s with IDE hard drives!) and plenty of bandwidth.

As YouTube, Twitter, and Instagram didn't exist people were hosting this content on their own ISP web hosting. Back then internet service providers gave their subscribers circa 100 MB to 200 MB of storage. Unfortunately, the amount of bandwidth allocated to each account was very tiny so as soon as a new piece of content came available it soon disappeared from the internet.

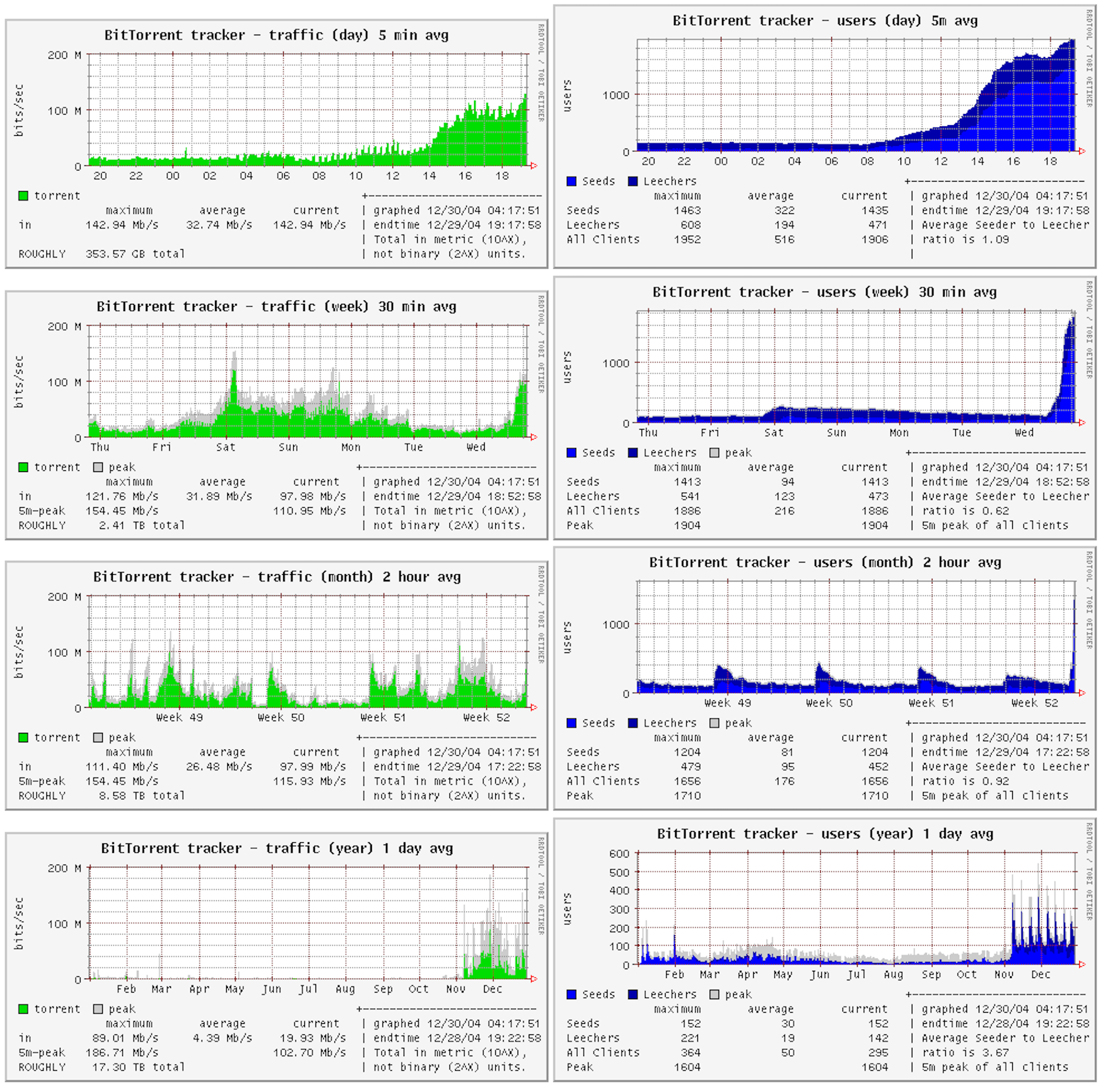

So, I started mirroring the videos and photos on my bare-metal servers and set up a content delivery network consisting of rsync mirrors to other people's computers and serving content via BitTorrent as well as direct downloads.

One of the key things behind the growth of the website and the creation of awareness of the website was raw http logs.

raw http logs are an untapped goldmine

When someone visits a website a request is sent to the webserver as follows

127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326 "http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"

Where /apache_pb.gif is the resource that was requested and http://www.example.com/start.html is where the traffic was coming from.

Most people reach for traditional tools such as Google Analytics and the like when doing marketing activities today but this is the wrong approach.

If you are building developer tools understand that your target demographic typically uses Adblock browser extensions or blocks JavaScript which means that unless you study the raw http logs then you won't be getting a proper understanding of where your traffic is coming from.

understand where your traffic is coming from

As traffic started flowing in. I spent many sleepless nights using bash, grep and tail to monitor where traffic was coming from. When a new referer came through I went to the community and origin of the traffic and engaged them there where they are.

Eventually hopping between these communities to post updates when new media was discovered became too time intensive so a decision was made. I broke existing links and redirected all traffic back to the Waveofdestruction.org homepage which essentially inverted the flow of traffic and established the website as the central authority for all matters related to the tsunami.

what to look for

Over 18 years have passed since the creation of that website but to this day I still reach for raw http logs first because the insights they reveal are powerful and they represent the whole truth.

Today, I'm going to reveal one of the tricks and things I look for in raw http logs as part of my day-to-day when working with clients.

If you are building a product that targets the enterprise understand that the enterprise typically either self-hosts its infrastructure or purchases products from SaaS vendors.

If the enterprise company self-hosts its infrastructure then you need to keep an eye out for the following http referer strings:

jira.[*.]companyname.comgitlab.[*.]companyname.comgithub.[*.]companyname.comconfluence.[*.]companyname.comwiki.[*.]companyname.com*.corp.companyname.com*.int.companyname.com*.aws.companyname.comsharepoint.[*.]companyname.comintranet.[*.]companyname.com

If the enterprise company purchases products from SaaS vendors then understand that it's common for these SaaS vendors to create tenants for each one of their customers under a unique cname that is typically the name of the customer. Keep an eye out for the following http referer strings.

companyname.zoom.comcompanyname.webex.comcompanyname.atlassian.netcompanyname.feishu.cn

identify enterprise buying intent

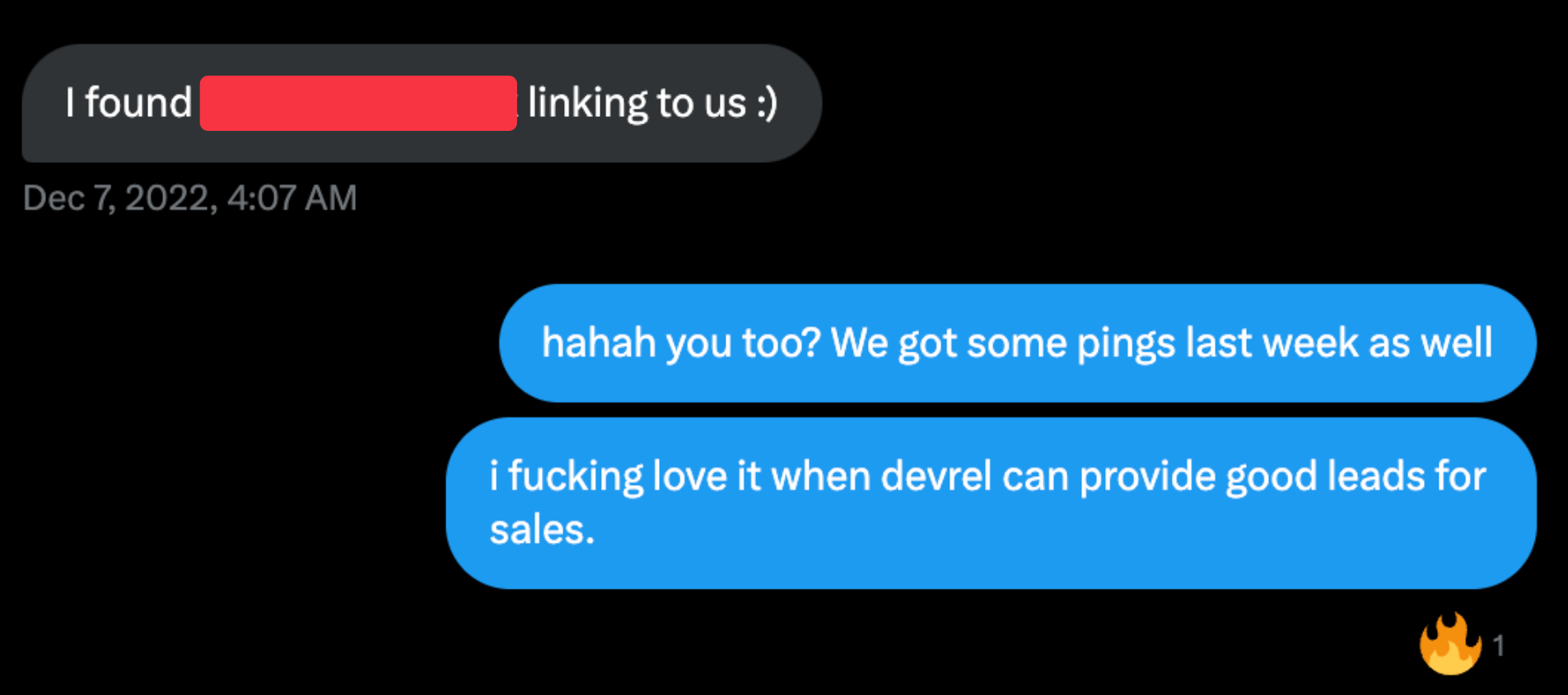

By keeping a careful eye on http referer traffic it is possible to create a feedback loop from marketing that helps sales teams with their planning, measure lead interest and help with timing (or approach of) sales-related activities to close deals. Here's how.

- If you see http referers from zoom/webex/feishu then people are aware of your product and are discussing your tool internally within their enterprise.

- If you see http referers from confluence or sharepoint then you potentially have an internal advocate within the company who has started a discussion about your tool. If that advocate links to particular pages then this information should be fed back to product managers to help prioritise the product roadmap (ie. which features should be developed or which web browsers should be supported) and to the sales team to help with measurement if a lead is hot or cold.

- If you see http referers from Jira, GitLab or GitHub then congratulations. The enterprise company has moved past discussion and into action. They are either starting a proof of concept (and someone has been tasked with the installation/configuration of your product) or are moving towards a production installation. Feed this information back to your sales team.

automate it in the simplest fashion possible

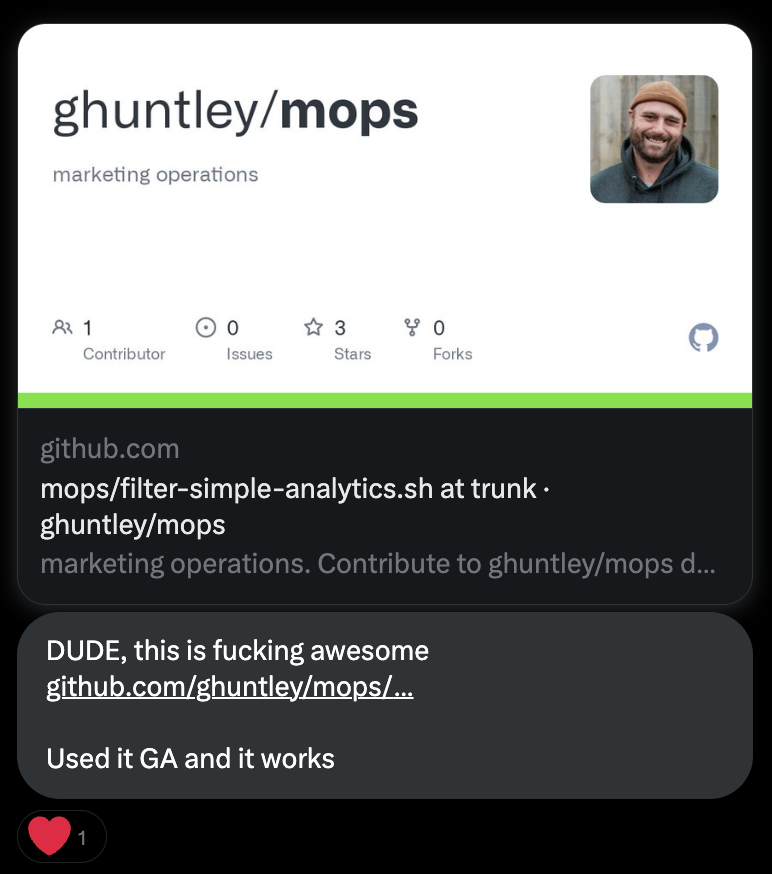

Over at https://github.com/ghuntley/mops you'll find an implementation of the above which uses the Gitscraper pattern to download and process raw http logs, extract the identifiers of interest and store the daily diffs as Git commits via GitHub Actions.

i still use these same techniques today

The year 2004 was a time before YouTube, Twitter, Instagram and social media in general but the exact same techniques and lessons from 18 years ago I still use today to grow something from nothing:

- launch with the smallest possible thing.

- sleep before a product launch and don't sleep for 72+ hrs when there's a fish on the line.

- go to where people are talking about you and engage in their community where they are.

- look for growth opportunities through studying http referer logs and execute.

- share insights found through studying http referer logs with other people to help them do their job.

Thanks for reading, I hope these grep filters help you win/close more sales and with demonstrating value that is measurable and that isn't a vanity metric.

This is a great set of tips for dev marketing and can confirm you see some interesting referer domains in the logs that can help guide your GTM team https://t.co/tqMfEzP0cu

— Beyang (@beyang) March 9, 2023

Geoff has been doing impactful Dev rel and community growth work for a long time. This post is worth a read. Also - he's looking for a new gig, so hit him up. https://t.co/tECqrhYN8w

— Disco N Tinuity (@monkchips) March 22, 2023

I'm currently looking for my next role in developer marketing/developer relations/developer experience engineering. If you’ve got a position in mind or an interesting project you want to get off the ground send an email to ghuntley@ghuntley.com for a confidential discussion. Thank you.