Claude Sonnet is a small-brained mechanical squirrel of <T>

This post is a follow-up to "LLMs are mirrors of operator skill", in which I remarked the following:

I'd ask the candidate to explain the sounds of each one of the LLMs. What are the patterns and behaviors, and what are the things that you've noticed for each one of the different LLMs out there?

After publishing, I broke the cardinal rule of the internet - never read the comments - and, well, it's been on my mind that expanding on these points and explaining them in simple terms will, perhaps, help others start to see the beauty in AI.

let's go buy a car

Humour me, dear reader, for a moment and rewind to when you first purchased a car. I remember my first car, and I remember specifically knowing nothing about cars. I remember asking my father "what is a good car?" and seeking his advice and recommendations.

Is that visual in your head? Good. Now fast-forward to the present, to the moment when you last purchased a car. What car was it? Why did you buy that car? What was different between your first car-buying experience and your last? What factors did you consider in your most recent purchase that you perhaps didn't even consider when purchasing your first car?

there are many cars, and each car has different sounds, properties and use cases

If you wanted to go off-road 4WD'ing, you wouldn't purchase a hatchback. No, you would likely pick up a Toyota Land Cruiser 40 Series.

Likewise, if you have (or are about to have) a large family, then upgrading from a two-door sports car to "something better and more suitable for family" is the ultimate vehicle upgrade trope in itself.

Now you might be wondering why I'm discussing cars (now), guitars (previously), and later on the page, animals; well, it's because I'm talking about LLMs, but through analogies...

LLMs as guitars

Most people assume all LLMs are interchangeable, but that’s like saying all cars are the same. A 4x4, a hatchback, and a minivan serve different purposes.

There are many LLMs, and each LLM has different sounds, properties and use cases. Most people think the LLMs are all competing with each other. In part they are, but if you play around with them enough you'll notice each provider has a particular niche and is fine-tuning towards that niche.

Currently, consumers of AI are picking and choosing their AI based on the number of people a car seats (context window size) and the total cost of the vehicle (price per mile or token), which is the wrong way to conduct purchasing decisions.

Instead of comparing context window sizes vs. cost per million tokens, one should look deeper into the latent patterns of each model and consider what their needs are.

For the last couple of months, I've been using different ways to describe the emergent behaviour of LLMs to various people to refine what sticks and what does not. The first couple of attempts involved anthropomorphising the LLMs as animals.

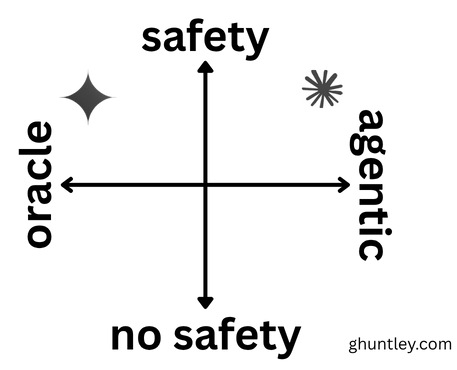

Galaxy-brained, precision-based sloths (oracles) and small-brained, hyperactive, incremental squirrels (agents).

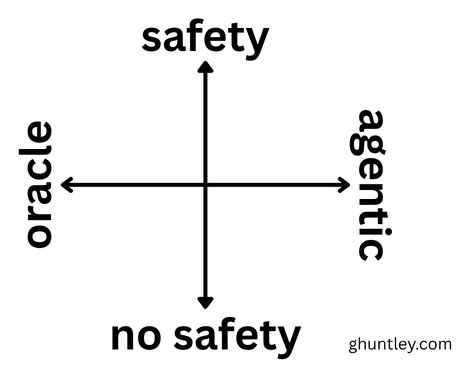

But I've come to realise that the latent patterns can be modelled as a four-way quadrant.

For example, if you’re conducting security research, which LLM would you choose?

Grok, with its lack of restrictive safeties, is ideal for red-team or offensive security work, unlike Anthropic, whose safeties limit such tasks.

If you needed to summarise a document, which LLM would you choose?

For summarising documents, Gemini shines due to its large context window and reinforcement learning, delivering near-perfect results.

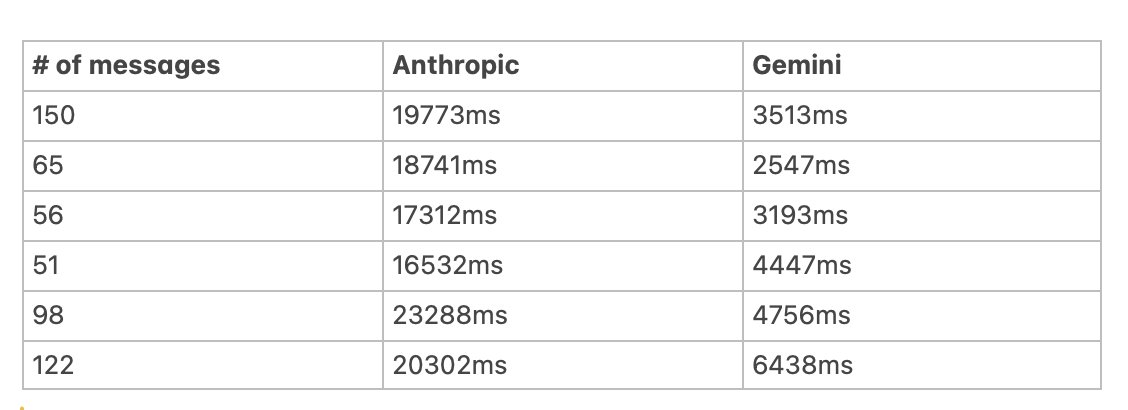

We recently switched Amp to use Gemini Flash when compacting or summarising threads. Gemini Flash is 4-6x faster, roughly 30x cheaper for our customers, and provides better summaries when compacting a thread or creating a new thread with a summary.
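As a rough illustration of routing work by task rather than by brand, here is a minimal sketch. The task kinds, the model labels and the choice of default are all assumptions made for the example; this is not how Amp is actually implemented, and the labels are not real API model identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str   # placeholder label, not a real API model identifier
    reason: str


# Hypothetical routing table: task kinds and model labels are illustrative only.
FAST_SUMMARISER = Route("gemini-flash", "fast and cheap; suited to compacting long threads")
ROUTES = {
    "summarise": FAST_SUMMARISER,
    "compact": FAST_SUMMARISER,
}

# Assumed default: anything that needs tool calls goes to the agentic model.
DEFAULT_ROUTE = Route("claude-sonnet", "incremental, tool-calling agent loop")


def pick_model(task_kind: str) -> Route:
    """Return the route for a task kind, falling back to the agentic default."""
    return ROUTES.get(task_kind, DEFAULT_ROUTE)


print(pick_model("summarise").model)
print(pick_model("edit_file").model)
```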

That's the good news. The bad news is that, in their current iteration, Gemini models just won't do tool calls.

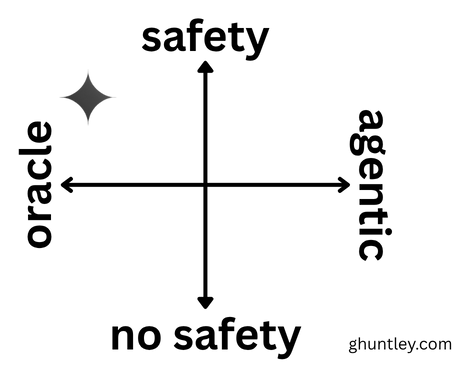

Gemini models are like galaxy-brained sloths that won't chase agentic tool-call reward functions.

This has been known for the last three months, but I suppose the recent launch of the CLI has brought it to the attention of more people, who are now experiencing it firsthand. The full-size Gemini models aim for engineering perfection, which, considering who made Gemini, makes perfect sense.

So far my experience with Gemini Code is … not amazing. It's really bad at actually doing edits. It's sometimes marinating for 5 minutes for a basic edit. pic.twitter.com/S5LIWAlBWL

— Armin Ronacher ⇌ (@mitsuhiko) June 25, 2025

Gemini models are high-safety, high-oracle. They are helpful for batch, non-interactive workloads and summarisation.

Gemini has not yet nailed the cornerstone use case for automating software development, which is that of an incremental mechanical squirrel (agentic). Perhaps it never will, as agentic sits in the quadrant directly opposite the oracle.
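For readers who think better in code, the quadrant can be sketched as two axes and a lookup. The placements below are only my reading of this post (Gemini as high-safety oracle, Claude Sonnet as a safety-limited agentic squirrel, Grok as low-safety with no stated position on the other axis), and the class and function names are made up for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Safety(Enum):
    HIGH = "high"
    LOW = "low"


class Style(Enum):
    ORACLE = "oracle"    # the sloth
    AGENTIC = "agentic"  # the squirrel


@dataclass(frozen=True)
class Profile:
    name: str
    safety: Safety
    style: Optional[Style]  # None where the post does not place the model


# Assumed placements, taken from the prose above rather than any benchmark.
PROFILES = [
    Profile("Gemini", Safety.HIGH, Style.ORACLE),
    Profile("Claude Sonnet", Safety.HIGH, Style.AGENTIC),
    Profile("Grok", Safety.LOW, None),
]


def candidates(safety: Optional[Safety] = None, style: Optional[Style] = None) -> List[str]:
    """Names of models matching the requested quadrant; None means 'any'."""
    return [
        p.name
        for p in PROFILES
        if (safety is None or p.safety is safety)
        and (style is None or p.style is style)
    ]


print(candidates(style=Style.AGENTIC))
print(candidates(safety=Safety.LOW))
```

Asking for a need (an axis value) rather than a brand name is the whole point: the table can change as model versions change, while the question stays the same.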

claude sonnet is squee aka a squirrel

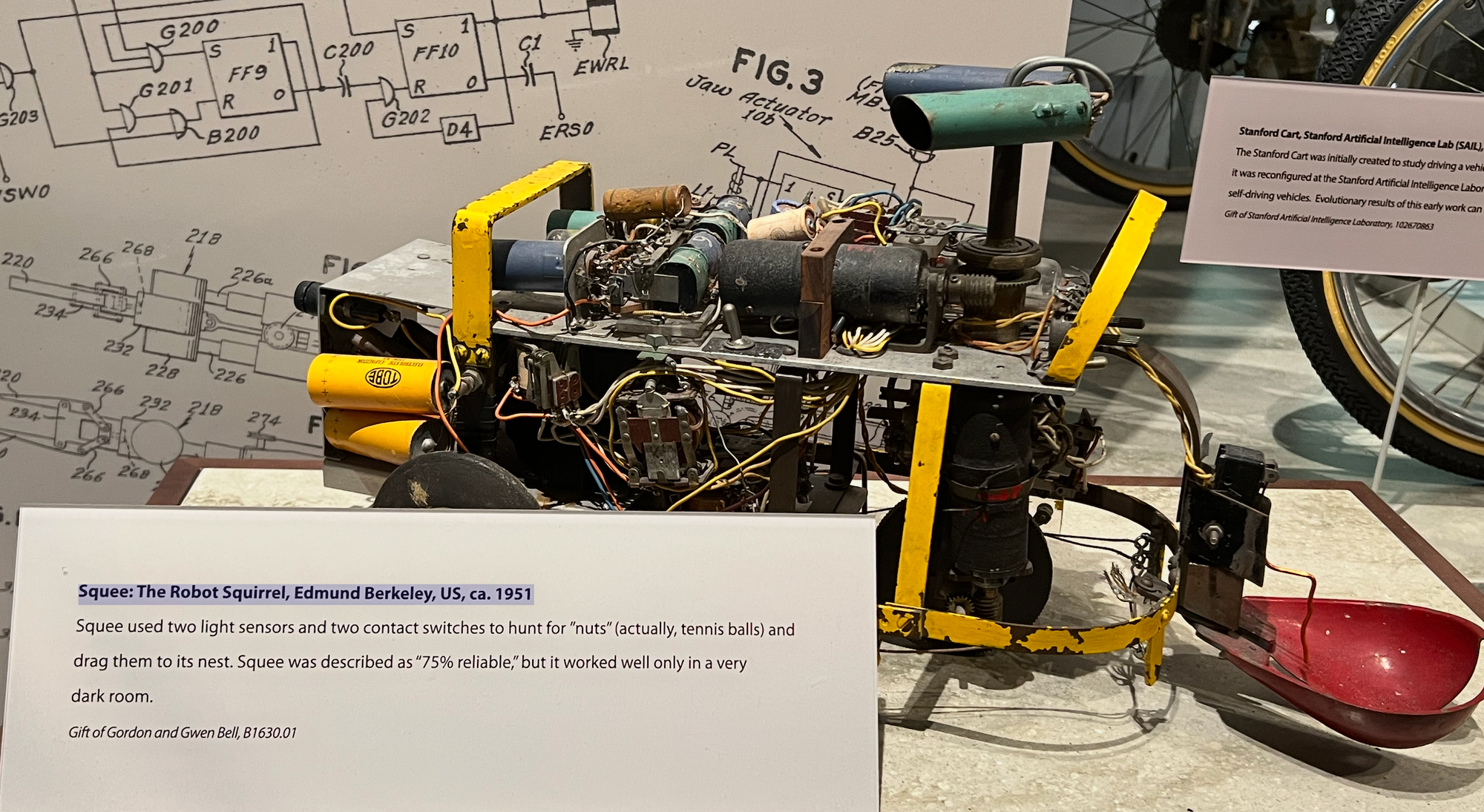

While visiting the Computer History Museum in Mountain View, I stumbled upon the original mechanical squirrel. Kind of random, because the description on the exhibit is precisely how I've been describing Sonnet to my mates.

Now, in 2025, unlike in the 1950s, when Squee would only chase tennis balls, Claude Sonnet will chase anything.

It turns out that a generic "chase anything" incremental loop is handy if you seek to automate software. Having only 150kb of usable context window does not matter if you can spawn hundreds of subagents that can act as squirrels.
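To make the "many small squirrels" idea concrete, here is a minimal sketch of fanning work out to subagents that each stay within a fixed budget. Everything in it is an assumption for illustration: the budget is counted in characters purely for simplicity, `run_subagent` is a stand-in for a real model and tool loop, and the function names are invented.

```python
from concurrent.futures import ThreadPoolExecutor

CONTEXT_BUDGET = 150_000  # assumed per-subagent budget, counted in characters


def split_into_batches(items, budget=CONTEXT_BUDGET):
    """Greedily pack items into batches whose total size stays within budget.

    An item larger than the budget still gets a batch of its own.
    """
    batches, current, used = [], [], 0
    for item in items:
        size = len(item)
        if current and used + size > budget:
            batches.append(current)
            current, used = [], 0
        current.append(item)
        used += size
    if current:
        batches.append(current)
    return batches


def run_subagent(batch):
    """Stand-in for one squirrel; a real one would run a model and tool loop."""
    return f"handled {len(batch)} item(s)"


def fan_out(items, budget=CONTEXT_BUDGET, max_workers=8):
    """Run one subagent per batch and return results in batch order."""
    batches = split_into_batches(items, budget)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_subagent, batches))


print(fan_out(["a" * 60, "b" * 60, "c" * 60], budget=120))
```

No single squirrel ever sees the whole job, so the size of the overall task is bounded by how many squirrels you spawn rather than by one context window.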

84 squee (claude subagents) chasing <T>

closing thoughts

There's no such thing as Claude, and there's no such thing as Grok, and there's no such thing as Gemini. What we have instead are versions of them. LLMs are software. Software is not static and constantly evolves.

When people are making a purchasing decision and reading about the behaviours of an LLM, such as in this post, or comparing one coding tool to another, they just use brand names, and they go, "Hey, yeah, I'm using a BYD (Claude 4). You using a Tesla? (Claude 4)"

The BYD could be using a different underlying version of Claude 4 than Tesla...

This is one of the reasons why I think exposing model selectors to the end user just does not make sense. This space is highly complicated, and it's moving so fast.